Step 1 towards TEAL’s Secure Administration Environment (SAE) Training

21 Oct

As you might have seen on our social media channels, we have taken a week off from customer projects to focus on our “next big thing” [LINK] 😊.

We consider Microsoft’s ESAE approach a foundation for state-of-the-art enterprise-wide security. Our experience confirms that many aspects of ESAE require organizational and process changes in order to successfully use the technologies Microsoft proposes in the ESAE architecture. Besides our Active Directory Assessment, which gives our customers a good overview of their security posture, and our project work, which helps our customers implement effective measures to secure their environment, we are in the process of creating a training for Active Directory administrators.

The training will enable the participants to better understand attacks and countermeasures, as well as the impact on the processes in their day-to-day work. The training will consist of roughly one part theory and three parts hands-on experience.

Each trainee will receive access to their own environment. Those environments must be available ad hoc, quickly and repeatably, and must require only minimal human intervention to set up. During our research we did not find an open source project that fit our needs completely, so we decided to do it ourselves 😊. During our Hackathon we were able to create a working Proof of Concept, which we want to share with you in this blog post.

Lab (automation) requirements:

The above condenses to the following requirements:

- “Single click” setup for the person who is setting up the labs

- Labs shall be deployable to multiple (cloud) providers in the long run (at least Azure, AWS and Hyper-V)

- The lab infrastructure as well as the configuration state of the systems (i.e. what is inside the AD etc.) should be modular and based on config files.

- It must be possible to deploy multiple states of the lab (e.g. beginning of the training, start of day 2 etc.)

- The scripts must be usable for all systems in multiple parallel environments. All configuration differences must come from config files.

Current PoC solution

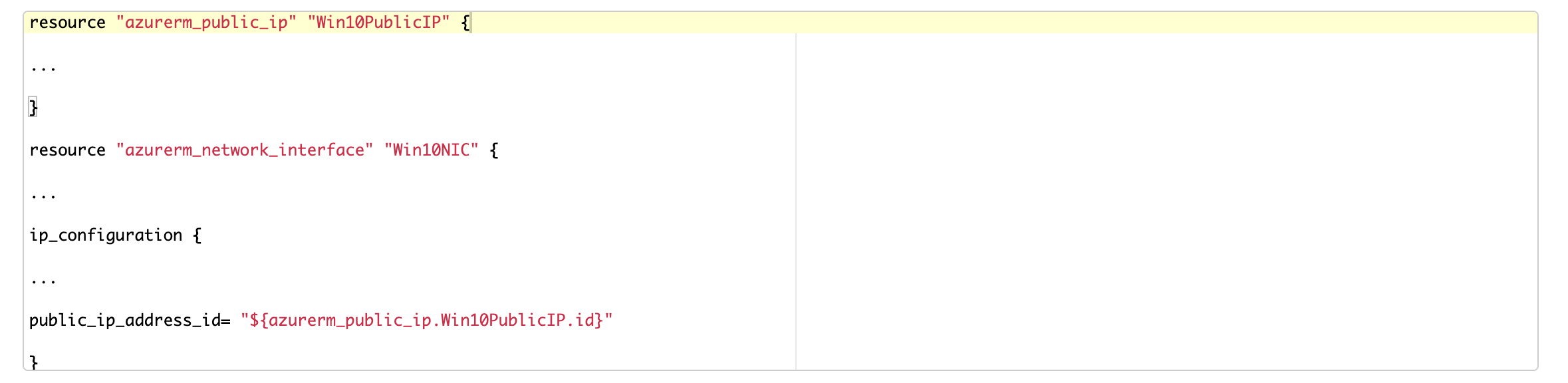

The process to build and destroy the environments will follow Infrastructure-as-Code (IaC) principles. As we want to be able to deploy to multiple providers (in the long run) we chose Terraform as the engine for IaC. In the PoC all network, compute resources and permissions etc. will be provisioned using AzureRM (Azure provider for Terraform).
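As a rough illustration of how config-driven, parallel lab environments could look with Terraform and AzureRM, consider the sketch below. It is not taken from our PoC repository: the `labs` variable, the naming scheme, the regions and the address ranges are all hypothetical, and it assumes a Terraform version that supports `required_providers` with a `source` (0.13 or later) and an AzureRM provider that requires the `features` block (2.0 or later).

```hcl
terraform {
  required_providers {
    azurerm = {
      source = "hashicorp/azurerm" # assumption: pin a version that suits your setup
    }
  }
}

provider "azurerm" {
  features {}
}

# Hypothetical config structure: one map entry per parallel lab environment.
# In practice the values would come from a .tfvars file instead of defaults.
variable "labs" {
  type = map(object({
    location      = string
    address_space = string
  }))
  default = {
    trainee01 = { location = "westeurope", address_space = "10.1.0.0/16" }
    trainee02 = { location = "westeurope", address_space = "10.2.0.0/16" }
  }
}

# One resource group per lab; the map key becomes part of the name.
resource "azurerm_resource_group" "lab" {
  for_each = var.labs
  name     = "rg-sae-${each.key}"
  location = each.value.location
}

# One virtual network per lab, placed in the matching resource group.
resource "azurerm_virtual_network" "lab" {
  for_each            = var.labs
  name                = "vnet-sae-${each.key}"
  address_space       = [each.value.address_space]
  location            = azurerm_resource_group.lab[each.key].location
  resource_group_name = azurerm_resource_group.lab[each.key].name
}
```

With this shape, adding another trainee environment only means adding another entry to the config; the Terraform code itself stays unchanged.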

We do not want to work with custom images or rely on integration tools available only in one of the cloud provider environments (e.g. there is no built-in Puppet agent for Hyper-V, CloudFormation is AWS exclusive, etc.). Also, to keep it simple, for the initial config we will be using Windows-only environments and thus we decided that all machine configuration will be done by PowerShell. But we are keeping in mind that the architecture should allow us to easily expand the lab with Linux systems in the future, using for example Ansible instead of PowerShell.

To achieve such an architecture without drowning in PowerShell, the key is to use Terraform’s declarative power to the maximum possible extent. That implies separating infrastructure orchestration, defined as connectivity definitions and resource permission management, from VM configuration management.

The interesting part of the whole exercise is dependency management between the systems’ roles, especially Domain Controller vs. Domain Clients. Terraform is built to manage dependencies, but here it can only do so based on the outcome of the PowerShell scripts that ensure the AD and user machine configuration. There are a few ways of making Terraform respect dependencies between resources.

- The first and most obvious one is using an explicit depends_on = ["provider.some.resource.name"]

- The second is to define an implicit dependency, by having one resource reference an attribute of the other during its creation.

- The third, a variant of the second, is using a module’s outputs as inputs to other definitions.

We will be using all three options in different areas of our solution.
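The three options can be sketched side by side as follows. This is only an illustration under stated assumptions: the modules under `./modules/network` and `./modules/windows-vm`, their inputs and the `subnet_id` output are hypothetical and would have to exist locally, a configured `azurerm` provider is assumed, and `depends_on` on a module block assumes Terraform 0.13 or later.

```hcl
resource "azurerm_resource_group" "lab" {
  name     = "rg-sae-lab" # hypothetical name
  location = "westeurope" # hypothetical region
}

# Option 2, implicit dependency: the module call reads attributes of the
# resource group, so Terraform creates the group before anything in the module.
module "network" {
  source              = "./modules/network" # hypothetical local module
  resource_group_name = azurerm_resource_group.lab.name
  location            = azurerm_resource_group.lab.location
}

# Option 3, module output as input: the VM module consumes an output of the
# network module (assumed here to be called subnet_id).
module "domain_controller" {
  source    = "./modules/windows-vm" # hypothetical local module
  name      = "dc01"
  subnet_id = module.network.subnet_id
}

# Option 1, explicit dependency: the client does not read any value from the
# domain controller, so the ordering has to be declared by hand.
module "client" {
  source    = "./modules/windows-vm"
  name      = "client01"
  subnet_id = module.network.subnet_id

  depends_on = [module.domain_controller]
}
```

The explicit form is the only one that helps when nothing flows from one resource to the other, which is the situation between a Domain Controller and the machines that later join its domain.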

PoC details:

The PoC consists of:

1. Parallel setup of the Azure infrastructure: networking, roles, permissions and VMs. This will be set up as Modules, used by the following points.

2. On top of the Modules above, preparing the configuration of the Active Directory Domain Controllers, using Azure Extensions running a PowerShell script. Terraform can use this declaratively when the Extension provides exit codes.

3. On top of the Modules above, and declaring point 2 as an explicit dependency (the first option from above), preparing the configuration of the Domain User machines.
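Points 2 and 3 could look roughly like the sketch below. It assumes the Azure Custom Script Extension for Windows as the extension type and a recent AzureRM provider that accepts `virtual_machine_id`; the variables, script names and extension names are hypothetical, and the VMs themselves are expected to come from the Modules of point 1.

```hcl
provider "azurerm" {
  features {}
}

# Hypothetical inputs: IDs of VMs created elsewhere (e.g. by the Modules of
# point 1) and a base URL from which the PowerShell scripts can be downloaded.
variable "dc_vm_id" {
  type = string
}

variable "client_vm_id" {
  type = string
}

variable "script_base_url" {
  type = string
}

# Point 2: configure the Domain Controller through a PowerShell script.
# If the script ends with a non-zero exit code, the extension reports a
# failure and Terraform marks this resource as failed.
resource "azurerm_virtual_machine_extension" "dc_config" {
  name                 = "configure-dc"
  virtual_machine_id   = var.dc_vm_id
  publisher            = "Microsoft.Compute"
  type                 = "CustomScriptExtension"
  type_handler_version = "1.10"

  settings = jsonencode({
    fileUris         = ["${var.script_base_url}/configure-dc.ps1"]
    commandToExecute = "powershell -ExecutionPolicy Unrestricted -File configure-dc.ps1"
  })
}

# Point 3: configure a Domain User machine only after the Domain Controller
# configuration has finished successfully.
resource "azurerm_virtual_machine_extension" "client_config" {
  name                 = "configure-client"
  virtual_machine_id   = var.client_vm_id
  publisher            = "Microsoft.Compute"
  type                 = "CustomScriptExtension"
  type_handler_version = "1.10"

  settings = jsonencode({
    fileUris         = ["${var.script_base_url}/configure-client.ps1"]
    commandToExecute = "powershell -ExecutionPolicy Unrestricted -File configure-client.ps1"
  })

  depends_on = [azurerm_virtual_machine_extension.dc_config]
}
```

Because the client extension depends on the Domain Controller extension, a failing Domain Controller script stops the run before any client tries to join a domain that does not exist yet.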

Finally, transitions of the environment state between exercises will mostly affect the AD configuration (without any need for infrastructure changes) and will therefore be done imperatively, using PowerShell scripts.

In the following repository (https://github.com/teal-technology-consulting/SAE-Lab) one can find the simplified Terraform and PowerShell code to test such a setup with one basic Module, dependencies and passing exit codes from PowerShell to Terraform for the control flow.

Tell us if you like our approach or would have done something differently. We would love to hear your opinions!

